Machine Learning Tools

Recently I went to the Omaha Learn Swift Meetup. Though it has Swift in the name, in reality the meetup is a 50/50 split of iOS and Android discussions. Five people showed up in person and three connected remotely. There were two demos. The first was on the use of GitHub Copilot with Android Studio and the second was on the usefulness of ChatGPT for analyzing and generating code. What follows are my impressions of both.

GitHub Copilot

Android Studio has an official plugin that allows direct interaction with Copilot. To use it, you write a comment that describes, in natural language, the functionality you want. Copilot responds with a code suggestion that you can either accept or refine by editing the comment. This sounds pretty awesome, and it is, but from what I have seen it can have its issues. The generated code may contain errors or, more often, isn’t quite what the developer needed, so they have to keep modifying the comment to get closer to what they need. This means the developer needs to be fairly experienced to judge whether the generated code is actually right. The dev doing the demo gave his opinion that it mostly saves time typing out a lot of code, acting as a more advanced form of code completion, but that it may not be worthwhile for more complex code. I imagine this will change with better models in the future.
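
To make the comment-driven workflow concrete, here is a small hypothetical illustration. It is written in Swift to match the rest of this post rather than the Kotlin shown at the demo, and the function below is hand-written for this example, not captured Copilot output. The name average(of:) and the prompt wording are assumptions.

```swift
import Foundation

// Prompt the developer types as an ordinary comment:
// Return the average of an array of Doubles, or nil if the array is empty.

// The kind of suggestion an assistant might offer in response
// (hypothetical, written by hand for this illustration).
func average(of values: [Double]) -> Double? {
    // An empty array has no meaningful average, so return nil.
    guard !values.isEmpty else { return nil }
    // Sum the values and divide by the element count.
    return values.reduce(0, +) / Double(values.count)
}
```

If the suggestion is not quite right, for example if you wanted 0 instead of nil for an empty array, you would edit the comment and ask again, which is the back-and-forth described above.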

As for using Copilot with iOS development, there is no Xcode plugin at this time, and there may never be one due to Apple’s limitations on Xcode plugins. There is a workaround using Visual Studio Code, but it’s rather clunky and inconvenient. Apple has evidently added Stable Diffusion support to Core ML, so maybe we will see something for ChatGPT soon as well.

ChatGPT

ChatGPT is a little different. It has two modes of operation: discussion and generation. In discussion mode you can hold a conversation with it about a specific subject, or even have it quiz you with typical interview questions on that subject. I haven’t used this myself, but the dev giving the demo and at least one other person have tested this functionality.

Generation mode is quite interesting. You can ask it to generate a piece of code that does a specific thing, and it will describe the algorithm needed to accomplish the task and then write a block of code that implements it.

I decided to test code generation by asking it to display a 3D cube using SwiftUI and SceneKit. It generated the following code:

    import SwiftUI
    import SceneKit

    struct CubeView: View {
        var body: some View {
            let sceneView = SCNView()
            let scene = SCNScene()
            let cameraNode = SCNNode()
            let cubeNode = SCNNode()
            cameraNode.camera = SCNCamera()
            cameraNode.position = SCNVector3(x: 0, y: 0, z: 5)
            scene.rootNode.addChildNode(cameraNode)
            cubeNode.geometry = SCNBox(width: 1, height: 1, length: 1, chamferRadius: 0.1)
            cubeNode.position = SCNVector3(x: 0, y: 0, z: 0)
            scene.rootNode.addChildNode(cubeNode)
            sceneView.scene = scene
            return sceneView
        }
    }

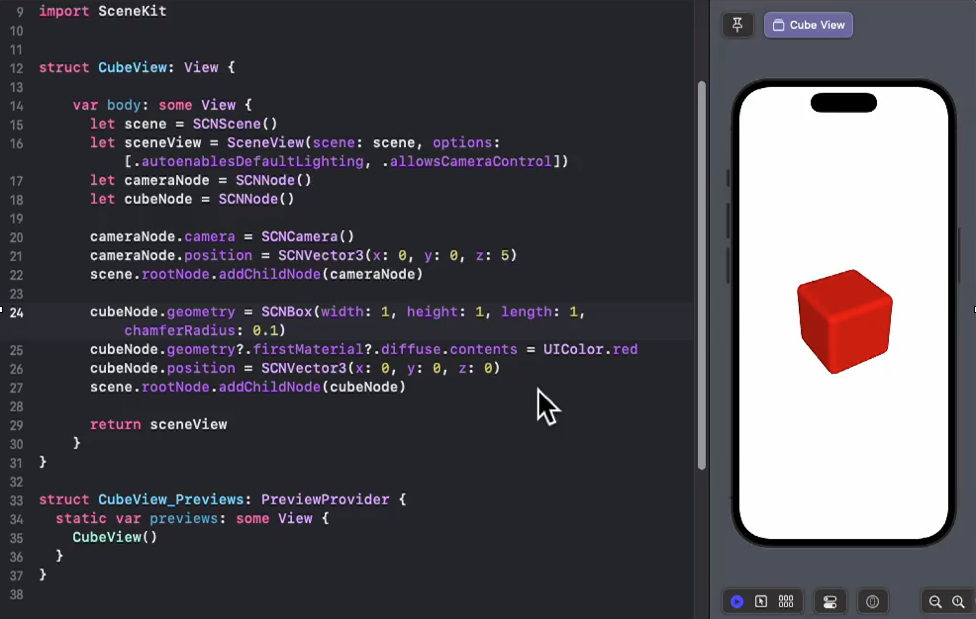

This code looks right, but it will not compile because sceneView is an SCNView, which doesn’t conform to SwiftUI’s View protocol. The fix is to use SwiftUI’s SceneView instead. SceneView takes the scene as an argument, along with some options. For this demonstration I will use the .autoenablesDefaultLighting and .allowsCameraControl options. The view becomes this:

    import SwiftUI
    import SceneKit

    struct CubeView: View {
        var body: some View {
            let scene = SCNScene()
            // SceneView is the SwiftUI-native way to show a SceneKit scene.
            let sceneView = SceneView(scene: scene, options: [.autoenablesDefaultLighting, .allowsCameraControl])
            let cameraNode = SCNNode()
            let cubeNode = SCNNode()
            // Place the camera 5 units back along z so the cube is in view.
            cameraNode.camera = SCNCamera()
            cameraNode.position = SCNVector3(x: 0, y: 0, z: 5)
            scene.rootNode.addChildNode(cameraNode)
            // A unit cube with slightly rounded edges, centered at the origin.
            cubeNode.geometry = SCNBox(width: 1, height: 1, length: 1, chamferRadius: 0.1)
            cubeNode.position = SCNVector3(x: 0, y: 0, z: 0)
            scene.rootNode.addChildNode(cubeNode)
            return sceneView
        }
    }

So, ChatGPT was pretty close, but it didn’t seem to understand that SwiftUI needs SceneView rather than SCNView. You could, of course, use SCNView, but that would require wrapping it in a UIViewRepresentable to make it usable as a View, which takes more code than is necessary.
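
For comparison, here is a minimal sketch of what the UIViewRepresentable route might look like, assuming an iOS target. The names makeCubeScene, SCNViewWrapper, and WrappedCubeView are made up for this illustration; the scene setup is the same camera-and-cube setup as in the code above.

```swift
import SwiftUI
import SceneKit

// Hypothetical helper: builds the same camera-and-cube scene as above.
func makeCubeScene() -> SCNScene {
    let scene = SCNScene()

    let cameraNode = SCNNode()
    cameraNode.camera = SCNCamera()
    cameraNode.position = SCNVector3(x: 0, y: 0, z: 5)
    scene.rootNode.addChildNode(cameraNode)

    let cubeNode = SCNNode()
    cubeNode.geometry = SCNBox(width: 1, height: 1, length: 1, chamferRadius: 0.1)
    cubeNode.position = SCNVector3(x: 0, y: 0, z: 0)
    scene.rootNode.addChildNode(cubeNode)

    return scene
}

// Hypothetical wrapper: lets SwiftUI host the UIKit-based SCNView (iOS only).
struct SCNViewWrapper: UIViewRepresentable {
    let scene: SCNScene

    func makeUIView(context: Context) -> SCNView {
        let view = SCNView()
        view.scene = scene
        // Same behavior as the two SceneView options used above.
        view.autoenablesDefaultLighting = true
        view.allowsCameraControl = true
        return view
    }

    func updateUIView(_ uiView: SCNView, context: Context) {
        // Nothing to update: the scene is static in this sketch.
    }
}

struct WrappedCubeView: View {
    // Build the scene once rather than on every body evaluation.
    private let scene = makeCubeScene()

    var body: some View {
        SCNViewWrapper(scene: scene)
    }
}
```

The wrapper type and its two required methods are the extra code that SceneView spares you, so the SceneView version is the simpler choice unless you need something only SCNView exposes.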

In short, though these tools cannot generate complete apps at this time, they look to be turning into time savers for experienced developers.